I have a Seagate GoFlex Net with two 2 TB hard drives attached to it via SATA. The device itself is connected to my PC via its Gigabit Ethernet port. It houses a 1.2 GHz Marvell Kirkwood and 128 MB of RAM. I am booting Debian from a USB stick connected to its USB 2.0 port.

The specs are pretty neat, so I planned to use it as my NAS with 4 TB of attached storage. The most common use case is transferring big files (1-10 GB) between my laptop and the device.

Now what are the common ways to achieve this?

scp:

    scp /local/path user@goflex:/remote/path

rsync:

    rsync -Ph /local/path user@goflex:/remote/path

sshfs:

    sshfs user@goflex:/remote/path /mnt
    cp /local/path /mnt

ssh:

    ssh user@goflex "cat > /remote/path" < /local/path

I then did some benchmarks to see how they perform:

scp: 5.90 MB/s

rsync: 5.16 MB/s

sshfs: 5.05 MB/s

ssh: 5.42 MB/s

They all use ssh for transmission, so the similar results are no surprise. Also, 5.90 MB/s is not too shabby for a plain scp. It means that I can transfer 1 GB in a bit under three minutes, and I could live with that. Even for 10 GB files I would only have to wait about half an hour. That is mostly okay, since I usually know well in advance that I will need a file.
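These estimates are simple arithmetic: file size divided by transfer rate. The following is a minimal sketch of that calculation. It assumes 1 GB = 1024 MB, and `transfer_minutes` is a hypothetical helper name, not part of any tool.

```shell
#!/bin/sh
# Hypothetical helper: estimated transfer time in minutes.
# $1 = file size in GB (assumed 1 GB = 1024 MB), $2 = rate in MB/s.
transfer_minutes() {
    awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.1f\n", gb * 1024 / rate / 60 }'
}

transfer_minutes 1 5.90    # 1 GB over scp, prints 2.9
transfer_minutes 10 5.90   # 10 GB over scp, prints 28.9
```

With the 37.8 MB/s that netcat reaches later on, the same helper gives 0.5 and 4.5 minutes.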

But let's see if we can get faster than this. Let's analyze where the bottleneck is.

Let's have a look at the effective TCP transfer rate with netcat:

    ssh user@goflex "netcat -l -p 8000 > /dev/null"
    dd if=/dev/zero bs=10M count=1000 | netcat goflex 8000

79.3 MB/s, wow! Can we get more? Let's try increasing the buffer size on both ends. This can be done using nc6 with the -x argument on both sides:

    ssh user@goflex "netcat -x -l -p 8000 > /dev/null"
    dd if=/dev/zero bs=10M count=1000 | netcat -x goflex 8000

103 MB/s! Okay, the network is definitely NOT the bottleneck here.

Let's see how fast I can read from my hard drive:

    hdparm -tT /dev/sda

114.86 MB/s... hmm... and writing to it?

    ssh user@goflex "time sh -c 'dd if=/dev/zero of=/remote/path bs=10M count=100; sync'"

42.93 MB/s.

Those values are far faster than the puny 5.90 MB/s I get with scp. A look at the CPU usage during the transfer shows that the ssh process sits at 100% CPU the whole time. So the bottleneck appears to be ssh and the encryption/decryption it involves.

I'm transferring directly from my laptop to the device. Not even a switch sits in between, so encryption seems quite pointless here. Even authentication doesn't seem necessary in this setup. So how can the transfer be made unencrypted?

The ssh protocol specifies a null cipher for unencrypted connections, but OpenSSH doesn't support it. Supposedly, adding

    "none", SSH_CIPHER_NONE, 8, 0, 0, EVP_enc_null

to cipher.c adds a null cipher, but I didn't want to patch around in my installation.

So let's see how plain netcat performs:

    ssh user@goflex "netcat -l -p 8000 > /remote/path"
    netcat goflex 8000 < /local/path

32.9 MB/s. This is far better! Let's try a bigger buffer:

    ssh user@goflex "netcat -x -l -p 8000 > /remote/path"
    netcat -x goflex 8000 < /local/path

37.8 MB/s, even better! A 1 GB file now takes under half a minute and a 10 GB file under five minutes.

But it is tedious to copy multiple files or even a whole directory structure with netcat. There are far better tools for this.

An obvious candidate that doesn't encrypt is rsync used with the rsync protocol:

    rsync -Ph /local/path user@goflex::module/remote/path

30.96 MB/s, which is already much better!

I used the following line in /etc/inetd.conf to have inetd start the rsync daemon:

    rsync stream tcp nowait root /usr/bin/rsync rsyncd --daemon

Still, rsync is slower than pure netcat.
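The inetd line only starts the daemon. The `module` in `user@goflex::module/remote/path` must also be defined in the daemon's config file, which is /etc/rsyncd.conf by default. The following is a minimal sketch that writes such a file. It assumes a hypothetical helper `write_rsyncd_conf` and a hypothetical storage directory /srv/nas.

```shell
#!/bin/sh
# Hypothetical helper: write a minimal rsyncd.conf with one writable module.
# $1 = config file to write (normally /etc/rsyncd.conf)
# $2 = directory the module exports (e.g. the hypothetical /srv/nas)
write_rsyncd_conf() {
    cat > "$1" <<EOF
# "module" is the name used in user@goflex::module/remote/path
[module]
path = $2
# modules are read-only by default; allow uploads
read only = no
EOF
}

# Example, run as root on the GoFlex:
# write_rsyncd_conf /etc/rsyncd.conf /srv/nas
```

Depending on the `uid`/`gid` settings in rsyncd.conf, the exported directory must be writable by the user the daemon switches to.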

If we want directory trees, how about netcatting a tarball?

    ssh user@goflex "netcat -x -l -p 8000 | tar -C /remote/path -xf -"
    tar -cf - /local/path | netcat goflex 8000

26.2 MB/s, so tar seems to add quite some overhead.
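The tarball approach is an ordinary tar pipe with netcat spliced into the middle. The following is a minimal local sketch of the same pipeline without the network hop, using a hypothetical helper `tar_pipe`. It uses `-C` so that only the last path component is stored. In contrast, `tar -c /local/path` stores `local/path` (GNU tar strips the leading slash) and recreates that whole path below the target directory.

```shell
#!/bin/sh
# Hypothetical helper: copy directory $1 into existing directory $2 via a tar
# stream. In the netcat setup, the "|" between the two tars is replaced by
# netcat on the sender and the listening netcat on the receiver.
tar_pipe() {
    src_parent=$(dirname "$1")
    src_name=$(basename "$1")
    # -f - writes the archive to stdout and reads it from stdin explicitly.
    tar -C "$src_parent" -cf - "$src_name" | tar -C "$2" -xf -
}

# Example: tar_pipe /local/path /tmp/copy   # creates /tmp/copy/path
```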

How about ftp then? For this test I installed vsftpd and achieved 30.13 MB/s, which compares well with rsync.

I also tried nfs. Not surprisingly, its transfer rate of 31.5 MB/s is on par with rsync and ftp.

So what did I learn? Let's make a table:

| method | speed in MB/s |
|---|---|
| scp | 5.90 |
| rsync+ssh | 5.16 |
| sshfs | 5.05 |
| ssh | 5.42 |
| netcat | 32.9 |
| netcat -x | 37.8 |
| netcat -x tar | 26.2 |
| rsync | 30.96 |
| ftp | 30.13 |
| nfs | 31.5 |

For transferring a directory structure or many small files, unencrypted rsync seems the way to go. It outperforms a copy over ssh more than five-fold.
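The speed-up figures follow directly from the table. The following is a small sketch that computes such ratios. `speedup` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: how many times faster rate $2 is than rate $1 (MB/s).
speedup() {
    awk -v base="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", rate / base }'
}

speedup 5.90 30.96   # rsync vs. scp, prints 5.25
speedup 5.05 31.5    # nfs vs. sshfs, prints 6.24
```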

When the convenience of having the remote data mounted locally is needed, nfs outperforms sshfs, reaching speeds similar to rsync and ftp.

As rsync and nfs already provide good performance, I didn't look into a more convenient solution using ftp.

My policy will now be to use rsync for partial file transfers and to mount my remote files with nfs.

For the transfer of one huge file, netcat is faster. This is especially true with increased buffer sizes, which make it about 15% faster than without (37.8 vs. 32.9 MB/s).

But copying a file with netcat is tedious, so I wrote a script that reduces the whole remote-login, listen, send process to one command. The first argument is the local file. The second argument gives the remote user, host and target directory, written as user@host:path just as in scp. The file keeps its local name on the remote side.

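    #!/bin/bash -e
    # bash instead of sh: printf %q is not supported by dash, Debian's /bin/sh.

    # Split the second argument (user@host:path) into user and host.
    HOST=${2%%:*}
    USER=${HOST%%@*}
    if [ "$HOST" = "$2" -o "$USER" = "$HOST" ]; then
        echo "second argument is not of form user@host:path" >&2
        exit 1
    fi
    HOST=${HOST#*@}

    LPATH=$1
    LNAME=$(basename "$1")
    # Remote target: the given directory plus the local file name, escaped
    # so that the remote shell takes it literally.
    RPATH=$(printf %q "${2#*:}/$LNAME")

    # Start the listener on the remote host, give it time to come up,
    # then send the file with a progress display from pv.
    ssh "$USER@$HOST" "nc6 -x -l -p 8000 > $RPATH" &
    sleep 1.5
    pv "$LPATH" | nc6 -x "$HOST" 8000
    wait $!

    # Print the checksums of both copies for comparison.
    ssh "$USER@$HOST" "md5sum $RPATH" &
    md5sum "$LPATH"
    wait $!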

I use pv to show the transfer status on my local machine, and ssh to log in to the remote machine and start netcat in listening mode. After the transfer, I compare the md5sums to make sure that everything went fine. This step can of course be left out, but it was useful during testing. The remote path is escaped with printf %q.
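The script prints both checksums and leaves the comparison to the reader. The following sketches an automatic comparison. It assumes a hypothetical helper `verify_copy` that takes the local file followed by the command that prints the remote checksum.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the MD5 of local file $1 matches the
# checksum printed by the remaining arguments (a command, e.g. an ssh call).
verify_copy() {
    local_file=$1
    shift
    # md5sum prints "<hash>  <name>"; keep only the hash field.
    local_sum=$(md5sum < "$local_file" | cut -d' ' -f1)
    remote_sum=$("$@" | cut -d' ' -f1)
    [ -n "$local_sum" ] && [ "$local_sum" = "$remote_sum" ]
}

# In the script, the checksum lines could become e.g.:
# verify_copy "$LPATH" ssh "$USER@$HOST" "md5sum < $RPATH" || echo "checksum mismatch" >&2
```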

One problem with the above is the sleep. It cannot simply be dropped, because the remote side needs some time to start netcat and listen, but a fixed delay is unclean.
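One way to avoid the fixed sleep is to poll until the listener is up. The following sketch assumes a hypothetical helper `poll_until` that retries a command every 0.2 s until it succeeds or the attempts run out. The remote check in the usage comment asks `ss` whether port 8000 is listening. This assumes that iproute2's `ss` is installed on the GoFlex. The check deliberately avoids a test connection, because that would use up the single-shot nc6 listener.

```shell
#!/bin/sh
# Hypothetical helper: run "$@" repeatedly until it succeeds.
# $1 = approximate timeout in whole seconds (five attempts per second,
# not counting the time the command itself takes). Returns 1 on timeout.
poll_until() {
    tries=$(( $1 * 5 ))
    shift
    until "$@"; do
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] || return 1
        sleep 0.2
    done
}

# In the script, instead of "sleep 1.5":
# poll_until 10 ssh "$USER@$HOST" "ss -ltn 'sport = :8000' | grep -q LISTEN"
```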

Another problem is that one has to specify a username. The script also differs from scp, which requires the remote argument to be double-escaped, so the two are not used in exactly the same way. Finally, the host the script netcats to is the same as the host it logs in to with ssh. These are not necessarily the same, as one can specify an alias in ~/.ssh/config.
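Both the username problem and the alias problem could be addressed by asking ssh itself for the resolved configuration. `ssh -G` (available since OpenSSH 6.8) prints the effective options for a destination without connecting, one "key value" pair per line. The following sketch uses hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helper: print the value of option $1 from "key value" lines on stdin.
ssh_option() {
    awk -v key="$1" '$1 == key { print $2; exit }'
}

# Hypothetical helpers: real host name and login user for an ssh destination,
# honouring aliases and User lines in ~/.ssh/config.
resolve_host() { ssh -G "$1" | ssh_option hostname; }
resolve_user() { ssh -G "$1" | ssh_option user; }

# e.g. in the script: nc6 -x "$(resolve_host goflex)" 8000
```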

Last but not least, this only transfers from the local machine to the remote host. The other direction is of course possible in the same manner, but then one must know how the remote host can reach the local machine.

Due to all those inconveniences, I decided not to expand on the above script. Besides, rsync and nfs seem to perform well enough for day-to-day use.


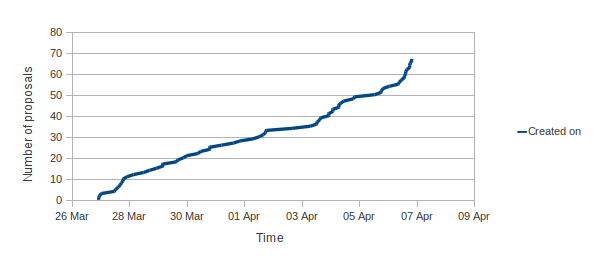

This year our efforts have paid off. Despite there being more mentoring organizations than in 2011 (175 in 2011 and 180 in 2012), Debian got 81 submissions this year versus 43 in 2011. As a result, this year we'll have 15 students in Debian versus 9 students last year! Without further ado, here is the list of projects and students who will be working with us this summer: